Optimization of RAG-Based LLM Inference Systems

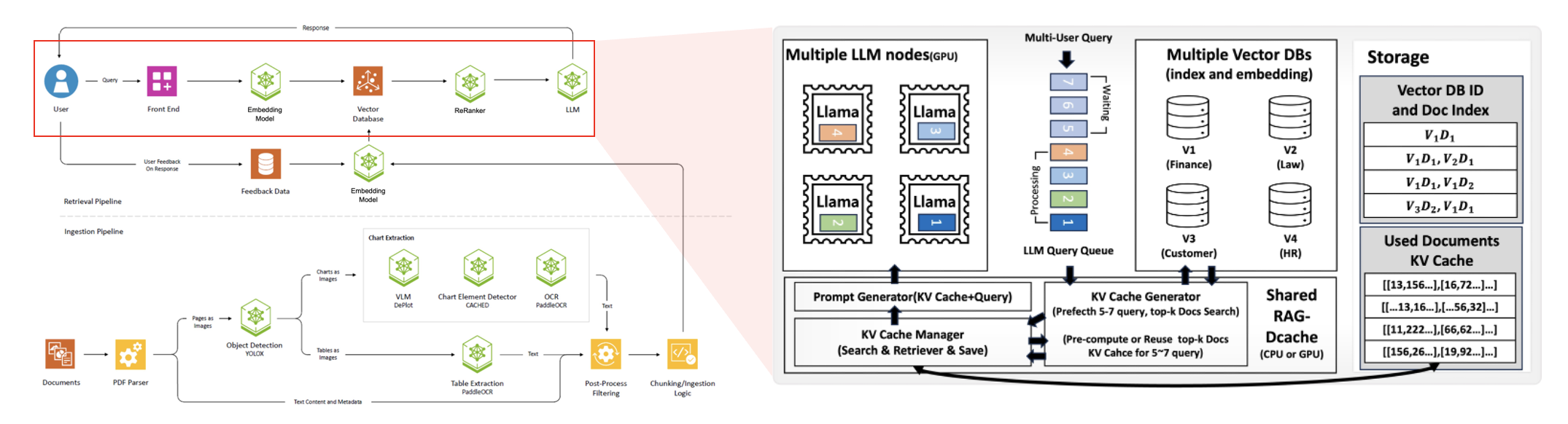

With the rapid proliferation of large language models (LLMs) across services, Retrieval-Augmented Generation (RAG) has garnered significant attention as a means to improve accuracy and response quality. RAG combines relevant information retrieved from an external knowledge base (e.g., documents, databases, or search engines) with an LLM to produce richer and more factual responses. This approach can bring domain knowledge (in areas such as healthcare, finance, and manufacturing) into the model's responses with minimal fine-tuning. However, deploying RAG-based LLM inference makes it difficult to use massive computational resources (e.g., GPUs) efficiently from the retrieval stage through the generation stage. The challenges include large memory bandwidth requirements, high communication overhead, and complex data flows. To address these issues, our lab focuses on system-level optimizations in High-Performance Computing (HPC) and cloud environments. These include heterogeneous resource allocation (GPU, FPGA, etc.) and parallelization to reduce bottlenecks in RAG retrieval and LLM generation; minimizing data movement overhead with high-bandwidth memory (HBM) and high-speed interconnects (e.g., NVLink, InfiniBand); hardware-friendly kernel tuning (e.g., CUDA kernel optimizations, FPGA RTL design) to handle high concurrency with stable latency; KV cache management (caching and prefetching); and collaborative scheduling for end-to-end RAG retrieval and LLM inference in distributed systems.
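
The retrieve-then-generate flow described above can be illustrated with a minimal Python sketch. It is not the lab's system: the bag-of-words `embed` function is a hypothetical stand-in for a real embedding model, the corpus is made up, and the final LLM call is omitted, so the sketch stops at building the augmented prompt.

```python
import math
from collections import Counter

def embed(text):
    """Toy bag-of-words vector (hypothetical stand-in for a real encoder)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, corpus, k=2):
    """Retrieval stage: return the k passages most similar to the query."""
    q = embed(query)
    ranked = sorted(corpus, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]

def build_prompt(query, passages):
    """Augmentation stage: prepend retrieved passages to the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Made-up knowledge base for illustration only.
corpus = [
    "NVLink is a high-speed GPU interconnect",
    "Object storage keeps data in buckets",
    "InfiniBand is a network interconnect used in HPC clusters",
]
query = "Which interconnect links GPU devices"
passages = retrieve(query, corpus, k=1)
prompt = build_prompt(query, passages)  # this string would be sent to the LLM
```

In a deployed system the retrieval stage and the generation stage typically run on different resources, which is where the data movement and scheduling costs discussed above arise.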

Distributed Deep Learning System in HPC and Cloud

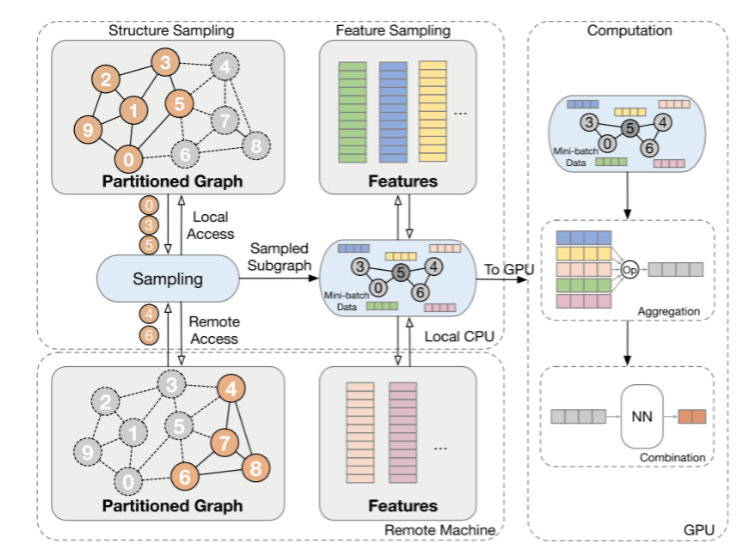

Image Source: L. Huang et al., "Practical Near-Data-Processing Architecture for Large-Scale Distributed Graph Neural Network," IEEE Access, vol. 10, pp. 46796-46807, 2022.

Recently, deep learning has been playing a crucial role not only in image and language processing but also in social network analysis and recommendation systems. In particular, Graph Neural Network (GNN) models have been gaining attention in these fields. A GNN is a deep learning model specialized in interpreting and learning complex graph structures that involve nodes, edges, and their interactions. Because of these characteristics, GNNs are valuable for many kinds of data represented as graphs, such as analyzing user relationships in social networks, predicting the properties and reactions of molecular structures, and building personalized recommendation systems. Moreover, GNNs combine effectively with distributed deep learning techniques to handle large-scale datasets and complex model structures, improving model performance and scalability. Distributed learning is implemented through two main methods: model parallelism, which partitions the model across devices, and data parallelism, which replicates the model and partitions the training data. A high-performance cluster computing environment is essential for distributed learning, and clusters equipped with high-performance GPUs, CPUs, memory, networks, and storage have become the de facto standard for reducing deep learning training time. Against this background, our research lab conducts software optimization research to minimize training time by making cost-effective use of available hardware resources for various deep learning training and inference tasks, including vision, language, and graph models.
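
As a rough illustration of data parallelism, the Python sketch below trains a one-parameter linear model on two data shards. Each simulated worker computes a gradient on its own shard, and the gradients are averaged before the shared weight is updated. The function names (`local_gradient`, `all_reduce_mean`, `data_parallel_step`) and the toy data are assumptions made for this example; a real system would run the workers on separate devices and use a collective communication library for the averaging step.

```python
def local_gradient(w, shard):
    """Mean-squared-error gradient of y = w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for a collective all-reduce: every worker receives the mean."""
    return sum(values) / len(values)

def data_parallel_step(w, shards, lr=0.01):
    """One synchronous step: local gradients, then a shared averaged update."""
    grads = [local_gradient(w, s) for s in shards]  # concurrent in a real system
    return w - lr * all_reduce_mean(grads)

# Toy dataset generated from y = 2 * x, split evenly across two workers.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
shards = [data[:2], data[2:]]

w = 0.0
for _ in range(200):
    w = data_parallel_step(w, shards)
```

With equally sized shards, the averaged gradient equals the full-batch gradient, so the result matches single-device training while the per-step compute is split across workers. The averaging step is the communication cost that distributed training systems try to reduce.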

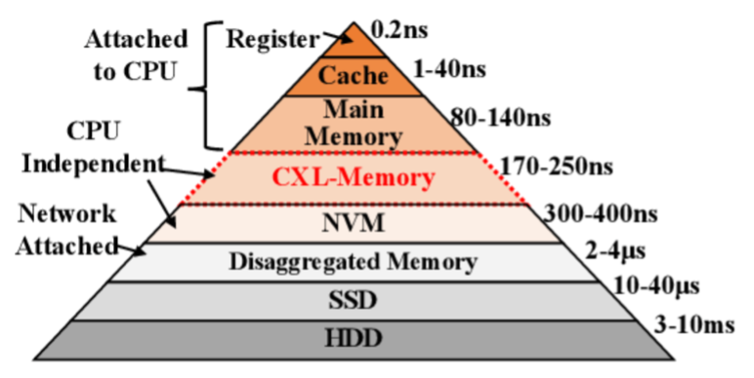

Memory and Storage Architecture for Deep Learning Workloads

Compute Express Link™ (CXL™) is an industry-supported cache-coherent interconnect for processors, memory expansion, and accelerators. CXL maintains coherence between the CPU memory space and the memory of attached devices, which enables resource sharing for higher performance, reduces software stack complexity, and lowers overall system cost. As accelerators such as GPUs are increasingly used to complement CPUs for emerging applications like artificial intelligence and machine learning, CXL was designed as an interface for high-speed communication and has become an industry standard. With CXL, the existing memory hierarchy is becoming deeper, making research on system software for operating systems and distributed computing environments increasingly important. Additionally, CXL has been recognized as a highly effective technology for artificial intelligence workloads (such as recommendation models) because it provides a large, expanded memory space beyond the limited GPU and CPU memory of the host. In our research lab, we study how to improve the efficiency of Graph Neural Network (GNN) based recommendation systems that operate on large-scale data in disaggregated computing environments such as those built on CXL. This involves research on memory, storage, and I/O optimization for better training performance and a cost-effective computing infrastructure.

Object Store for AI and Big Data Analytics Acceleration

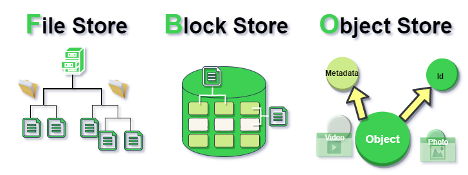

Image Source: https://rajkumaraug20.medium.com/file-storage-vs-block-storage-vs-object-storage-2519031a2646

Object storage is a technology for storing and managing data in units called objects. Modern organizations generate and analyze large amounts of unstructured data such as photos, videos, emails, web pages, sensor data, and audio files. Cloud object storage systems distribute this data across multiple physical devices while letting users efficiently access content from a single virtual storage repository. Metadata plays a crucial role in object storage technology. Data is kept as objects within a bucket rather than as files within folders: object storage combines the data chunks that make up a file, attaches all user-created metadata to it, and assigns a user-defined identifier. The result is a flat structure, the bucket, instead of hierarchical or layered storage. As a result, objects in a bucket can be retrieved and analyzed based on their function and attributes, regardless of file type or hierarchy. Because it is not constrained by data type, object storage is an ideal storage solution for data lakes that need to accommodate various types of data. Beyond data lakes, object storage also suits cloud-native applications, large-scale analytics, and machine learning by providing cost-effective, high-capacity data storage. This contrasts with traditional storage systems, whose hierarchical abstractions based on files and directories fail to properly reflect the requirements of modern applications. The difference stems from fundamental design principles: object storage departs from the traditional approach of designing storage around the concept of files built on top of blocks, the basic unit of input/output. Storage systems are now in transition from traditional file-based storage to more flexible object storage suited to contemporary use cases.
In line with this, our research lab investigates not only block and file storage management technologies but also object storage technologies, researching and implementing storage designs optimized for artificial intelligence and machine learning.
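
The flat, metadata-driven model described above can be sketched in a few lines of Python. The `Bucket` class, its method names, and the sample objects are hypothetical and exist only for this illustration; they do not correspond to the API of any particular object store.

```python
import uuid

class Bucket:
    """Toy in-memory bucket: a flat key -> (data, metadata) map, no folders."""

    def __init__(self):
        self._objects = {}

    def put(self, data, metadata, key=None):
        """Store an object with its metadata; generate a key if none is given."""
        key = key or uuid.uuid4().hex
        self._objects[key] = (bytes(data), dict(metadata))
        return key

    def get(self, key):
        """Fetch object data directly by identifier."""
        return self._objects[key][0]

    def find(self, **attrs):
        """Return keys whose metadata matches every given attribute."""
        return sorted(
            k for k, (_, meta) in self._objects.items()
            if all(meta.get(a) == v for a, v in attrs.items())
        )

bucket = Bucket()
bucket.put(b"image-bytes-1", {"type": "image", "label": "cat"}, key="img-001")
bucket.put(b"sensor,1.2", {"type": "csv", "source": "iot"}, key="log-001")
bucket.put(b"image-bytes-2", {"type": "image", "label": "dog"}, key="img-002")

image_keys = bucket.find(type="image")  # select by attribute, not by path
```

Objects of different types sit side by side in the same namespace, and selection is driven by metadata rather than by a directory path, which is the property that makes this model convenient for data lakes and training datasets.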

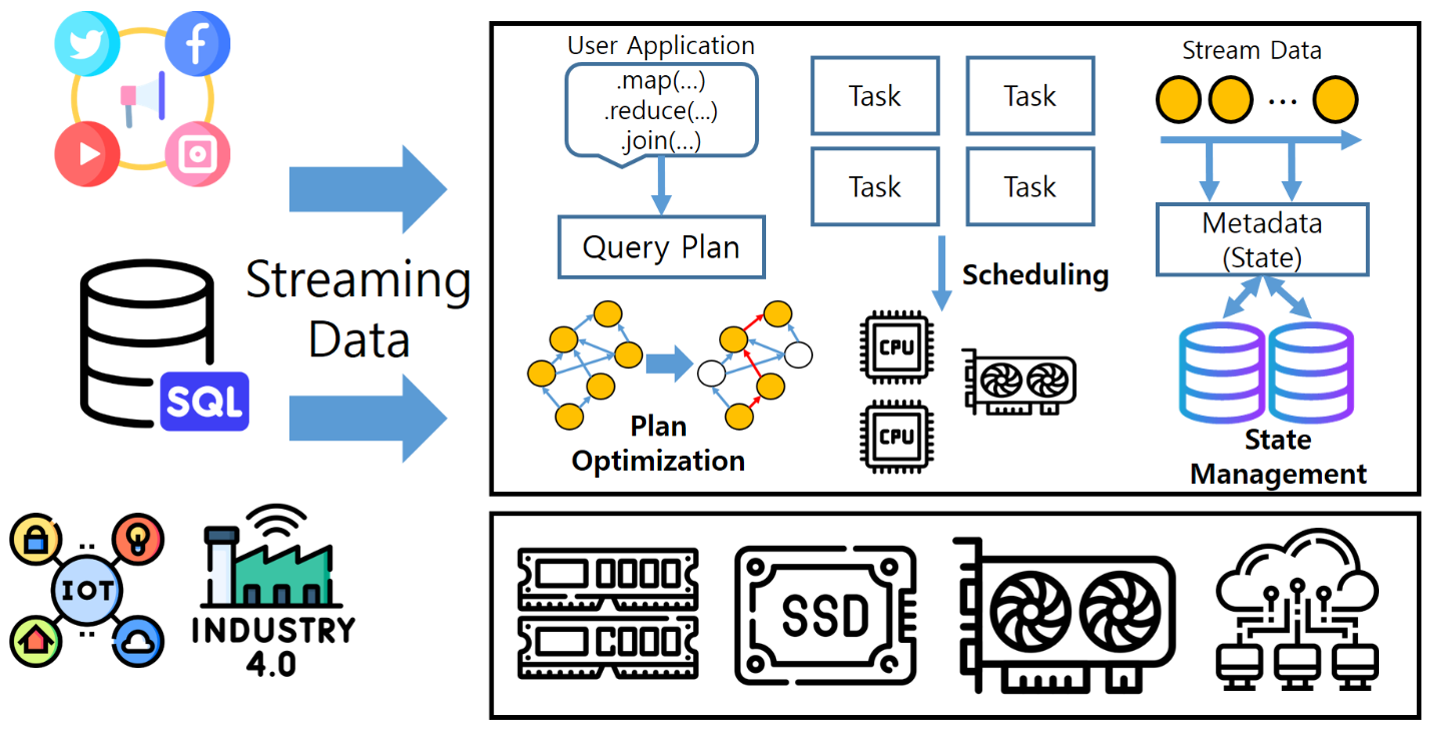

Streaming System Optimization for Query Acceleration

Recently, there has been an increase in services that rapidly analyze large volumes of real-time data arriving from sources such as social networks, financial transactions, and IoT sensors, and deliver the results to users. To support this, in-memory stream processing platforms have emerged, which load streaming data into memory for computation. Users build streaming applications by writing queries that combine arbitrary operators (such as map, reduce, and join). The streaming system then transforms each query into a graph structure and generates an optimal query execution plan. It further accelerates data processing by dividing each operator into multiple tasks and assigning them to CPU cores for parallel processing in a map-reduce style. Meanwhile, heterogeneous computing infrastructures, including the cloud, are becoming more common. However, existing streaming platforms are not optimized for these computing environments, which limits their performance potential. In our research lab, we study how to optimize streaming systems for the characteristics of heterogeneous computing infrastructures (such as CPUs and GPUs) and how to accelerate query processing through research on query execution plans, task scheduling, and state management.
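
The operator-to-task decomposition described above can be sketched as follows. This is a simplified Python illustration, not the design of any specific streaming platform: the query is a fixed map followed by a keyed reduce (a word count), the helper names are made up for the example, and a thread pool stands in for per-core task execution.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
import zlib

def map_op(record):
    """Map operator: split one record (a line) into (word, 1) pairs."""
    return [(word, 1) for word in record.split()]

def partition(pairs, num_tasks):
    """Route each key to one task so that task owns all state for that key."""
    parts = [[] for _ in range(num_tasks)]
    for key, value in pairs:
        parts[zlib.crc32(key.encode()) % num_tasks].append((key, value))
    return parts

def reduce_task(pairs):
    """Reduce operator task: sum the values for each key in its partition."""
    counts = Counter()
    for key, value in pairs:
        counts[key] += value
    return counts

def run_query(records, num_tasks=4):
    """Execute map -> partition by key -> parallel reduce -> merge."""
    with ThreadPoolExecutor(max_workers=num_tasks) as pool:
        mapped = [p for chunk in pool.map(map_op, records) for p in chunk]
        partials = pool.map(reduce_task, partition(mapped, num_tasks))
        result = Counter()
        for partial in partials:
            result.update(partial)
    return dict(result)

counts = run_query(["gpu cpu gpu", "cpu fpga", "gpu"])
```

Partitioning by key keeps each key's state inside a single task, so the reduce tasks need no coordination with one another. Deciding where such tasks run on heterogeneous hardware is the scheduling question raised above.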

Blockchain System Optimization

Blockchain is a distributed database technology in which data is stored and managed by all participants in a network rather than on a single central server. To achieve this, participants store and manage all generated data by linking it together over time, like a chain. Tampering with the data is practically impossible, because the copies held by all participating users would need to be modified. Blockchain is therefore a decentralized distributed system that needs no central server and allows data to be stored securely and transparently. However, blockchain requires all users to store all data, so it occupies significantly more total storage space than a centralized system. Erasure coding, in turn, is a technique that divides the original data into multiple chunks and generates multiple parity chunks for fault tolerance, so that the original data can be recovered as long as the number of lost chunks does not exceed the number of parity chunks. Erasure coding improves storage space efficiency by encoding the data and then deleting a certain number of parity chunks. In our research lab, we analyze the trade-off between the storage space savings and the resource consumption of erasure coding, and we research space-optimized blockchain systems that apply erasure coding accordingly.
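
To make the chunk-and-parity idea concrete, the Python sketch below uses the simplest possible code: `k` data chunks plus a single XOR parity chunk, which tolerates the loss of any one chunk. This is an assumption made for illustration. Schemes with multiple parity chunks, as described above, use more general codes such as Reed-Solomon, and the sketch does not represent the lab's method. The function names and the sample payload are likewise hypothetical.

```python
from functools import reduce

def xor_bytes(a, b):
    """Byte-wise XOR of two equal-length chunks."""
    return bytes(x ^ y for x, y in zip(a, b))

def encode(data, k):
    """Split data into k equal chunks (zero-padded) plus one XOR parity chunk."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    chunks = [padded[i * size:(i + 1) * size] for i in range(k)]
    return chunks + [reduce(xor_bytes, chunks)]

def recover(chunks):
    """Rebuild the single missing chunk (marked None) from the survivors."""
    missing = chunks.index(None)
    survivors = [c for c in chunks if c is not None]
    repaired = list(chunks)
    repaired[missing] = reduce(xor_bytes, survivors)
    return repaired

def storage_factor(k, m):
    """Stored bytes per original byte with k data and m parity chunks."""
    return (k + m) / k

block = b"blockchain block payload"
stored = encode(block, k=4)          # 4 data chunks + 1 parity chunk
damaged = list(stored)
damaged[2] = None                    # simulate losing one chunk
restored = recover(damaged)
# Strip zero padding; a real format would record the original length instead.
original = b"".join(restored[:4]).rstrip(b"\0")
```

Here `storage_factor(4, 1)` is 1.25, meaning the encoded chunks together take 1.25 times the size of the original data, whereas keeping a full copy on each of `n` nodes takes `n` times the size. The price is the encoding and recovery computation, which is the trade-off between space savings and resource consumption noted above.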